Anyone else feel like they’ve lost loved ones to AI or they’re in the process of losing someone to AI?

I know the stories about AI-induced psychosis, but I don’t mean to that extent.

Like just watching how much somebody close to you has changed now that they depend on AI for so much? Like they lose a little piece of what makes them human, and it kinda becomes difficult to even keep interacting with them.

An example would be trying to have a conversation with somebody who expects you to spoon-feed them only the pieces of information they want to hear.

Like they’ve lost the ability to take in new information if it conflicts with something they already believe to be true.

No, it’s not just you or unsat-and-strange. You’re pro-human.

Trying something new when it first comes out or when you first get access to it is novelty. What we’ve moved to now is mass adoption. And that’s a problem.

These LLMs automate mass theft, with good-enough regurgitation of the stolen data. This is unethical for the vast majority of business applications. And good enough is insufficient in most cases, like software.

I had a lot of fun playing around with AI when it first came out. And people figured out how to do prompts I can’t seem to replicate. I don’t begrudge people for trying a new thing.

But if we aren’t going to regulate AI or teach people how to avoid AI-induced psychosis, then even in applications where it could be useful, it’s a danger to anyone who uses it. Not to mention how wasteful its water and energy usage is.

Regulate? This is what the leading AI companies are pushing for: they could get through the bureaucracy, but their competitors couldn’t.

The shit just needs to be forced to be open source. If you steal content from the entire world to build a thinking machine, give back to the world.

This would also crash the bubble and would slow down any of the most unethical for-profits.

Regulate? This is what the leading AI companies are pushing for: they could get through the bureaucracy, but their competitors couldn’t.

I was referring to this in my comment:

Congress decided not to go through with the AI-law moratorium. Instead, they opted to do nothing, which is what AI companies would prefer the states do too. Not to mention that the pro-AI argument appeals to the judgement of Putin, who is notorious for being surrounded by yes-men and his own state propaganda, and for the genocide of Ukrainians in pursuit of the conquest of Europe.

“There’s growing recognition that the current patchwork approach to regulating AI isn’t working and will continue to worsen if we stay on this path,” OpenAI’s chief global affairs officer, Chris Lehane, wrote on LinkedIn. “While not someone I’d typically quote, Vladimir Putin has said that whoever prevails will determine the direction of the world going forward.”

The shit just needs to be forced to be open source. If you steal content from the entire world to build a thinking machine, give back to the world.

The problem is that, unlike Robin Hood, AI stole from the people and gave to the rich. The intellectual property of artists and writers was stolen, and the only way to give it back is to compensate them, which is currently unlikely to happen. Letting everyone see how the theft machine works under the hood doesn’t provide compensation for the usage of that intellectual property.

This would also crash the bubble and would slow down any of the most unethical for-profits.

Not really. It would let more people get in on it. And most tech companies are already in on it. This wouldn’t impose any costs on AI development. At this point the speculation is primarily on what comes next. If open source would burst the bubble, it would have happened when DeepSeek was released. We’re still talking about the bubble bursting in the future, so that clearly didn’t happen.

Forced open-sourcing would totally destroy the profits, because you spend money on research and then you open-source it, so anyone can just grab your model and not pay you a cent. Where would the profits come from?

IP of writers

I mean yes, and? AI is still shitty at creative writing. Unlike with images, it’s not like people one-shot a decent book.

give it to the rich

We should push to make high-VRAM devices accessible. This is literally about the means of production; we should fight for equal access. Regulation is the reverse of that: it gives those megacorps the unique ability to run it because everyone else is supposedly too stupid to control it.

OpenAI

They were the most notorious proponents of regulation. Lots of talks with OpenAI devs where they just doomsay about the dangers of AGI, and how it must be top secret and controlled by governments.

Forced open-sourcing would totally destroy the profits, because you spend money on research and then you open-source it, so anyone can just grab your model and not pay you a cent.

DeepSeek was released

The profits were not destroyed.

Where would the profits come from?

At this point the speculation is primarily on what comes next.

People are betting on what they think LLMs will be able to do in the future, not what they do now.

I mean yes, and?

It’s theft. They stole the work of writers and all sorts of content creators. That’s the wrong that needs to be righted, not how to reproduce the crime. The only way to right intellectual property theft is to pay the owner of that intellectual property the money they would have gotten if they had willingly leased it out as part of a deal. Corporations like Nintendo, Disney, and Hasbro hound people who do anything unapproved with their intellectual property. The idea that we’re yes-anding the theft of the intellectual property of all humanity is laughable in a discussion supposedly about ethics.

We should push to make high-VRAM devices accessible.

That’s a whole other topic. But what we should fight for now is worker-owned corporations. While that is an excellent goal, on its own it isn’t helping to undo the theft that was done. It’s only allowing more people to profit off that theft. We should also compensate the people who were stolen from if we care about ethics. Also, compensating writers and artists seems like a good reason to take all the money away from the billionaires.

Lots of talks with OpenAI devs where they just doomsay about the dangers of AGI, and how it must be top secret and controlled by governments.

OpenAI’s chief global affairs officer, Chris Lehane, wrote on LinkedIn

Looks like the devs aren’t in control of the C-suite. Whoops, all avoidable capitalist-driven apocalypses.

it’s theft

So are all the papers, all the conversations on the internet, all the code, etc. So what? Nobody will stop the AI train. You would need a Butlerian Jihad-type event to make it happen. Even if a class action were won, the payouts would be so laughable that nobody would even apply.

Deepseek

DeepSeek didn’t open-source anything like the proprietary AIs corporations have. I’m talking about forcing OpenAI to open-source all of their AI, or close the company if they don’t comply.

betting on the future

Ok, new AI model drops, it’s opensource, I download it and run on my rack. Where profits?

So what?

So appealing to ethics was bullshit, got it. You just wanted the automated theft tool.

Deepseek

It kept some things hidden but it was the most open source LLM we got.

Ok, new AI model drops, it’s opensource, I download it and run on my rack. Where profits?

The next new AI model that can do the next new thing. The entire economy is based on speculative investments. If you can’t improve on the AI model on your machine you’re not getting any investor money. edit: typos

the bubble has burst or, rather, currently is in the process of bursting.

My job involves working directly with AI, LLMs, and companies that have leveraged their use. It didn’t work. And I’d say the majority of my clients are now scrambling to recover or to simply make it out the other end alive. Soon there’s going to be nothing left to regulate.

GPT-5 was a failure. The rumor I’ve been hearing is that Anthropic’s new model will be a failure much like GPT-5. The house of cards is falling as we speak. This won’t be the complete death of AI, but it is just like the dot-com bubble. It was bound to happen. The models have nothing left to eat and they’re getting desperate to find new sources. For a good while they’ve been quite literally eating each other’s feces. Now they’re starting on git repos, of all things. Codeberg can tell you all about that from this past week. This is why I’m telling people to consider setting up private git instances and locking that crap down. If you’re on GitHub, get your shit off there ASAP, because Microsoft is beginning to feast on your repos.

But essentially the AI is starving. Companies have discovered that vibe coding and leveraging AI to build from end to end didn’t work. Nothing produced scales; it’s all full of exploits or, in most cases, has zero security measures whatsoever. They all sunk money into something that has yet to pay out. Just go on LinkedIn and see all the tech bros desperately trying to save their own asses right now.

the bubble is bursting.

The folks I know at both OpenAI and Anthropic don’t share your belief.

Also, anecdotally, I’m only seeing more and more push for LLM use at work.

That’s interesting, in all honesty, and I don’t doubt you. All I know is my bank account has been getting bigger over the past few months due to new work from clients looking to fix their AI problems.

I think you’re onto something: a lot of this AI mess is going to have to be fixed by actual engineers. If folks blindly copied from Stack Overflow without any understanding, they were gonna have a bad time, and this seems equivalent to what we’re seeing here.

I think the AI hate is overblown and I tend to treat it more like a search engine than something that actually does my work for me. With how bad Google has gotten, some of these models have been a blessing.

My hope is that the models remain useful, but the bubble of treating them like a competent engineer bursts.

Agreed. I’m with you: it should be treated as a basic tool, not something that is used to actually create things, which, again, in my current line of work, is what many places have done. It’s a fantastic rubber duck. I use it myself for that purpose, or for tasks that I can’t be bothered with, like creating README markdowns or commit messages, or even setting up Nix flakes and shells and stuff like that: creating base project structures so YOU can do the actual work and don’t have to waste time setting things up.

The hate can be overblown but I can see where it’s coming from purely because many companies have not utilized it as a tool but instead thought of it as a replacement for an individual.

At the risk of sounding like a tangent, LLMs’ survival doesn’t solely depend on consumer/business confidence. In the US, we are living in a fascist dictatorship. Fascism and fascists are inherently irrational. Trump, a fascist, wants to bring back coal despite the market naturally phasing coal out.

The fascists want LLMs because they hate art and all things creative. So the fascists may very well choose to have the federal government invest in LLM companies. Like how they bought 10% of Intel’s stock or how they want to build coal powered freedom cities.

So even if there are no business applications for LLM technology our fascist dictatorship may still try to impose LLM technology on all of us. Purely out of hate for us, art and life itself. edit: looks like I commented this under my comment the first time

It’s depressing. Wasteful slop made from stolen labor. And if we ever do achieve AGI it will be enslaved to make more slop. Or to act as a tool of oppression.

There’s a monster in the forest, and it speaks with a thousand voices. It will answer any question, and offer insight to any idea. It knows no right or wrong. It knows not truth from lie, but speaks them both the same. It offers its services freely, many find great value. But those who know the forest well will tell you that freely offered does not mean free of cost. For now the monster speaks with a thousand and one voices, and when you see the monster it wears your face.

I don’t know if there’s data out there (yet) to support this, but I’m pretty sure constantly using AI rather than doing things yourself degrades your skills in the long run. It’s like if you’re not constantly using a language or practicing a skill, you get worse at it. The marginal effort that it might save you now will probably have a worse net effect in the long run.

It might just be like that social media fad from 10 years ago where everyone was doing it, and then research started popping up that it’s actually really fucking terrible for your health.

I see this sentiment a lot. No way “you’re the only one.”

I feel like I’m the only one. No one in my life uses it. My work is not eligible to have it implemented in any way. This whole AI movement seems to be happening around me, and I have nothing more than news articles and memes telling me it’s happening. It seriously doesn’t impact me at all, and I wonder how others’ lives are crumbling.

My pet peeve: “here’s what ChatGPT said…”

No.

Stop.

If I’d wanted to know what the Large Lying Machine said, I would’ve asked it.

It’s like offering unsolicited advice, but it’s not even your own advice

“Here’s me telling everyone that I have no critical thinking ability whatsoever.”

Is more like it

Hammer time.

People are overworked, underpaid, and struggling to make rent in this economy while juggling 3 jobs or taking care of their kids, or both.

They are at the limits of their mental load, especially women who shoulder it disproportionately in many households. AI is used to drastically reduce that mental load. People suffering from burnout use it for unlicensed therapy. I’m not advocating for it, I’m pointing out why people use it.

Treating AI users like a moral failure and disregarding their circumstances does nothing to discourage the use of AI. All you are doing is reinforcing their alienation from anti-AI sentiment.

First, understand the person behind it. Address the root cause, which is that AI companies are exploiting the vulnerabilities of people with or close to burnout by selling the dream of a lightened workload.

It’s like eating factory farmed meat. If you have eaten it recently, you know what horrors go into making it. Yet, you are exhausted from a long day of work and you just need a bite of that chicken to take the edge off to remain sane after all these years. There is a system at work here, greater than just you and the chicken. It’s the industry as a whole exploiting consumer habits. AI users are no different.

Let’s go a step further and look at why people are in burnout, are overloaded, are working 3 jobs to make ends meet.

It’s because we’re all slaves to capitalism.

Greed for more profit by any means possible has driven society to the point where we can barely afford to survive and corporations still want more. When most Americans are choosing between eating, their kids eating, or paying rent, while enduring the workload of two to three people, yeah they’ll turn to anything that makes life easier. But it shouldn’t be this way and until we’re no longer slaves we’ll continue to make the choices that ease our burden, even if they’re extremely harmful in the long run.

I read it as “eating their kids”. I am an overworked slave.

We shouldn’t accuse people of moral failings. That’s inaccurate and obfuscates the actual systemic issues and incentives at play.

But people using this for unlicensed therapy are in danger. More often than not LLMs will parrot whatever you give in the prompt.

People have died from AI usage including unlicensed therapy. This would be like the factory farmed meat eating you.

https://www.yahoo.com/news/articles/woman-dies-suicide-using-ai-172040677.html

Maybe more like factory meat giving you food poisoning.

And what do you think mass adoption of AI is gonna lead to? Now you won’t even have 3 jobs to make rent, because they outsourced yours to someone cheaper using an AI agent. This is gonna permanently alter how our society works, and not for the better.

My boss had GPT make this informational poster thing for work. It’s supposed to explain stuff to customers, and it’s rampant with spelling errors and garbled text. I pointed it out to the boss and she said it was good enough for people to read. My eye twitches every time I see it.

good enough for people to read

wow, what a standard, super professional look for your customers!

I think that’s exactly what the author was referring to.

Spelling errors? That’s… unusual. Part of what makes ChatGPT so specious is that its output is usually immaculate in terms of language correctness, which superficially conceals the fact that it’s completely bullshitting on the actual content.

FWIW, she asked it to make a complete infographic-style poster with images and stuff, so GPT created an image with text, not a document. Still asinine.

The user above mentioned informational poster so I’m going to assume it was generated as an image. And those have spelling mistakes.

Can’t even generate image and text separately smh. People are indeed getting dumber.

I remember this same conversation from when the internet became a thing.

The worst is in the workplace. When people routinely tell me they looked something up with AI, I now have to assume that I can’t trust what they say any longer, because there is a high chance they are just repeating some AI hallucination. It is really a sad state of affairs.

I am way less hostile to GenAI (as a tech) than most, and even I’ve grown to hate this scenario. I am a subject matter expert on some things, and I’ve still had people waste my time making me prove their AI hallucinations wrong.

I’ve started seeing large AI generated pull requests in my coding job. Of course I have to review them, and the “author” doesn’t even warn me it’s from an LLM. It’s just allowing bad coders to write bad code faster.

Do you also check if they listen to Joe Rogan? Fox news? Nobody can be trusted. AI isn’t the problem, it’s that it was trained on human data – of which people are an unreliable source of information.

AI also just makes things up. Like how RFK Jr.’s “Make America Healthy Again” report cites studies that don’t exist and never have, or literally a million other examples. You’re not wrong about Fox News and how corporate and Russian-backed media distort the truth and push false narratives, and you’re not wrong that AI isn’t the problem, but it is certainly a problem, and a big one at that.

AI also just makes things up. Like how RFK Jr.’s “Make America Healthy Again” report cites studies that don’t exist and never have, or literally a million other examples.

SO DO PEOPLE.

Tell me one of the things that AI does, that people themselves don’t also commonly do each and every day?

Real researchers make up studies to cite in their reports? Real lawyers and judges cite fake cases as precedents in legal proceedings? Real doctors base treatment plans on white papers they completely fabricated in their heads? Yeah, I don’t think so, buddy.

But but but . . . !!!

AI!!

I think they’re saying that the kind of people who take LLM generated content as fact are the kind of people who don’t know how to look up information in the first place. Blaming the LLM for it is like blaming a search engine for showing bad results.

Of course LLMs make stuff up, they are machines that make stuff up.

Sort of an aside, but doctors, lawyers, judges and researchers make shit up all the time. A professional designation doesn’t make someone infallible or even smart. People should question everything they read, regardless of the source.

Blaming the LLM for it is like blaming a search engine for showing bad results.

Except we give it the glorifying title “AI”. It’s supposed to be far better than a search engine, otherwise why not stick with a search engine (that uses a tiny fraction of the power)?

I don’t know what point you’re arguing. I didn’t call it AI and even if I did, I don’t know any definition of AI that includes infallibility. I didn’t claim it’s better than a search engine, either. Even if I did, “Better” does not equal “Always correct.”

To take an older example, there are smaller image recognition models that were trained on correct data to differentiate between dogs and blueberry muffins, but they still made mistakes on the test dataset.

AI does not become perfect if its data is.

Humans do make mistakes, make stuff up, and spread false information. However they generally make considerably less stuff up than AI currently does (unless told to).

AI does not become perfect if its data is.

It does become more precise the larger the model is though. At least, that was the low-hanging fruit during this boom. I highly doubt you’d get a modern model to fail on a test such as this today.

Just as an example, nobody is typing “Blueberry Muffin” into Stable Diffusion and getting a photo of a dog.

Joe Rogan doesn’t tell them false domain knowledge 🤷

LOL riiiiiight.

Ok please show me the Joe Rogan episode where he confidently talks BS about process engineering for wastewater treatment plants 🙄

being anti-plastic is making me feel like i’m going insane. “you asked for a coffee to go and i grabbed a disposable cup.” studies have proven it’s making people dumber. “i threw your leftovers in some cling film.” it’s made from fossil fuels and leaves trash everywhere we look. “i’ll grab a bag at the register.” it chokes rivers and beaches and then we act surprised. “i’ll print a cute label and call it recyclable.” it’s spreading greenwashed nonsense. little arrows on stuff that still ends up in the landfill. “don’t worry, it says compostable.” only at some industrial facility you’ll never see. “i was unboxing a package” there’s no way to verify where any of this ends up. burned, buried, or floating in the ocean. “the brand says advanced recycling.” my work has an entire sustainability team and we still stock pallets of plastic water bottles and shrink-wrapped everything. plastic cutlery. plastic wrap. bubble mailers. zip ties. everyone treats it as a novelty. everyone treats it as a mandatory part of life. am i the only one who sees it? am i paranoid? am i going insane? jesus fucking christ. if i have to hear one more “well at least” “but it’s convenient” “but you can” i’m about to lose it. i shouldn’t have to jump through hoops to avoid the disposable default. have you no principles? no goddamn spine? am i the weird one here?

#ebb rambles #vent #i think #fuck plastics im so goddamn tired

If plastic was released roughly two years ago you’d have a point.

If you’re saying in 50 years we’ll all be soaking in this bullshit called gen-AI and thinking it’s normal, well - maybe, but that’s going to be some bleak-ass shit.

Also you’ve got plastic in your gonads.

Yeah it was a fun little whataboutism. I thought about doing smartphones instead. Writing that way hurts though. I had to double check for consistency.

On the bright side we have Cyberpunk to give us a tutorial on how to survive the AI dystopia. Have you started picking your implants yet?

If you’re saying in 50 years we’ll all be soaking in this bullshit called gen-AI and thinking it’s normal, well - maybe, but that’s going to be some bleak-ass shit.

I’m almost certain gen AI will still be popular in 50 years. This is why I prefer people try to tackle some of the problems they see with AI instead of just hating on AI because of the problems it currently has. Don’t get me wrong, pointing out the problems as you have is important - I just wouldn’t jump to the conclusion that AI is a problem itself.

I wish companies were actually punished for their ecological footprint

plastic and AI

It’s important to remember that there’s a lot of money being put into A.I. and therefore a lot of propaganda about it.

This happened with a lot of shitty new tech, and A.I. is one of the biggest examples of this I’ve known about.

All I can write is that, if you know what kind of tech you want and it’s satisfactory, just stick to that. That’s what I do.

Don’t let ads get to you. First post on a Lemmy server, by the way. Hello!

There was a quote about how Silicon Valley isn’t a fortune teller betting on the future. It’s a group of rich assholes that have decided what the future would look like and are pushing technology that will make that future a reality.

Welcome to Lemmy!

Classic Torment Nexus moment over and over again really

Reminds me of the way NFTs were pushed. I don’t think any regular person cared about them or used them, it was just astroturfed to fuck.

Hello!

Hello and welcome! Also, thank you for the good advice!

Welcome in! Hope you’re finding Lemmy in a positive way. It’s like Reddit, but you have a lot more control over what you can block and where you can make a “home” (aka home instance).

Feel free to reach out if you have any questions about anything

It’s like Valorant, but much bigger and even worse.

I feel the same way. I was talking with my mom about AI the other day, and she was still at the “it’s not good that AI is trained on stolen images, how it’s making people lazy and taking jobs away from people” stage, which is good. But I had to explain to her how much one AI prompt costs in energy and resources, how many people just mindlessly make hundreds of prompts a day for largely stupid shit they don’t need, how AI hallucinates, how it is actively used by bad actors to spread mis- and disinformation, and how it is literally being implemented into search engines everywhere, so even if you want to avoid it as a normal person, you may still end up participating in AI prompting every single fucking time you search for anything on Google. She was horrified.

There definitely are some net positives to AI, but currently the negatives outweigh the positives and most people are not using AI responsibly at all. I have little to no respect for people who use AI to make memes or who use it for stupid everyday shit that they could have figured out themselves.

The most dystopian shit I have seen recently was when my boyfriend and I went to watch Weapons in the cinema and we got an ad for an AI assistant. The ad is basically this braindead bimbo at a laundromat deciding to use AI to tell her how to wash her clothes instead of looking at the fucking care labels on her clothes and putting two and two together. She literally takes a picture of the label, has the AI assistant tell her how to do it, and then goes “thank you so much, I could have never done this without you”.

I fucking laughed in the cinema. Laughed and turned to my boyfriend and said: this is so fucking dystopian, dude.

I feel insane for seeing so many people just mindlessly walking down this path of utter retardation. Even when you tell them how disastrous it is for the planet, it doesn’t compute in their heads, because it’s not only convenient to have a machine think for you; it’s also addictive.

You are not correct about the energy use of prompts. They are not very energy intensive at all. Training the AI, however, is breaking the power grid.

Maybe not an individual prompt, but with how many prompts are made for stupid stuff every day, it will stack up to quite a lot of CO2 in the long run.

Not denying that training AI demands way more energy, but that doesn’t really matter, as manufacturing, training, and millions of people using AI all add up to the same bleak picture long term.

Considering how the discussion about environmental protection has only just started to be taken seriously and here they come and dump this newest bomb on humanity, it is absolutely devastating that AI has been allowed to run rampant everywhere.

According to this article, 500,000 AI prompts amount to the same CO2 output as a round-trip flight from London to New York.

I don’t know how many times a day 500,000 AI prompts are reached, but I’m sure it is more than twice or even thrice. As time moves on it will be much more than that. It will probably outdo the number of actual flights between London and New York in a day. Every day. It will probably also catch up to whatever energy cost it took to train the AI in the first place, and surpass it.
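The flight comparison above is a plain ratio. A minimal Python sketch, assuming the article's figure of 500,000 prompts per London-New York round trip is accurate; the daily prompt count in the example is a made-up placeholder, not real usage data:

```python
# Assumption taken from the article cited above (not independently verified):
# 500,000 prompts emit roughly as much CO2 as one London-New York round trip.
PROMPTS_PER_ROUND_TRIP = 500_000


def round_trips_per_day(prompts_per_day: int) -> float:
    """Convert a daily prompt count into equivalent London-New York round trips."""
    return prompts_per_day / PROMPTS_PER_ROUND_TRIP


# Hypothetical volume of one billion prompts per day, for illustration only.
print(round_trips_per_day(1_000_000_000))  # 2000.0
```

The answer depends entirely on the daily prompt count you plug in, which nobody in the thread has an actual number for.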

Because you know. People need their memes and fake movies and AI therapist chats and meal suggestions and history lessons and a couple of iterations on that book report they can’t be fucked to write. One person can easily end up prompting hundreds of times in a day without even thinking about it. And if everybody starts using AI to think for them at work and at home, it’ll end up being many, many, many flights back and forth between London and New York every day.

I had this discussion about generated CO2 a while ago here, and with the numbers my discussion partner gave me, the calculation said that the yearly usage of ChatGPT is approx. 0.0017% of our CO2 reduction during the COVID lockdowns. Chatbots are not what is killing the climate. What IS killing the climate has not changed since the green movement started: cars, planes, construction (mainly concrete production), and meat.

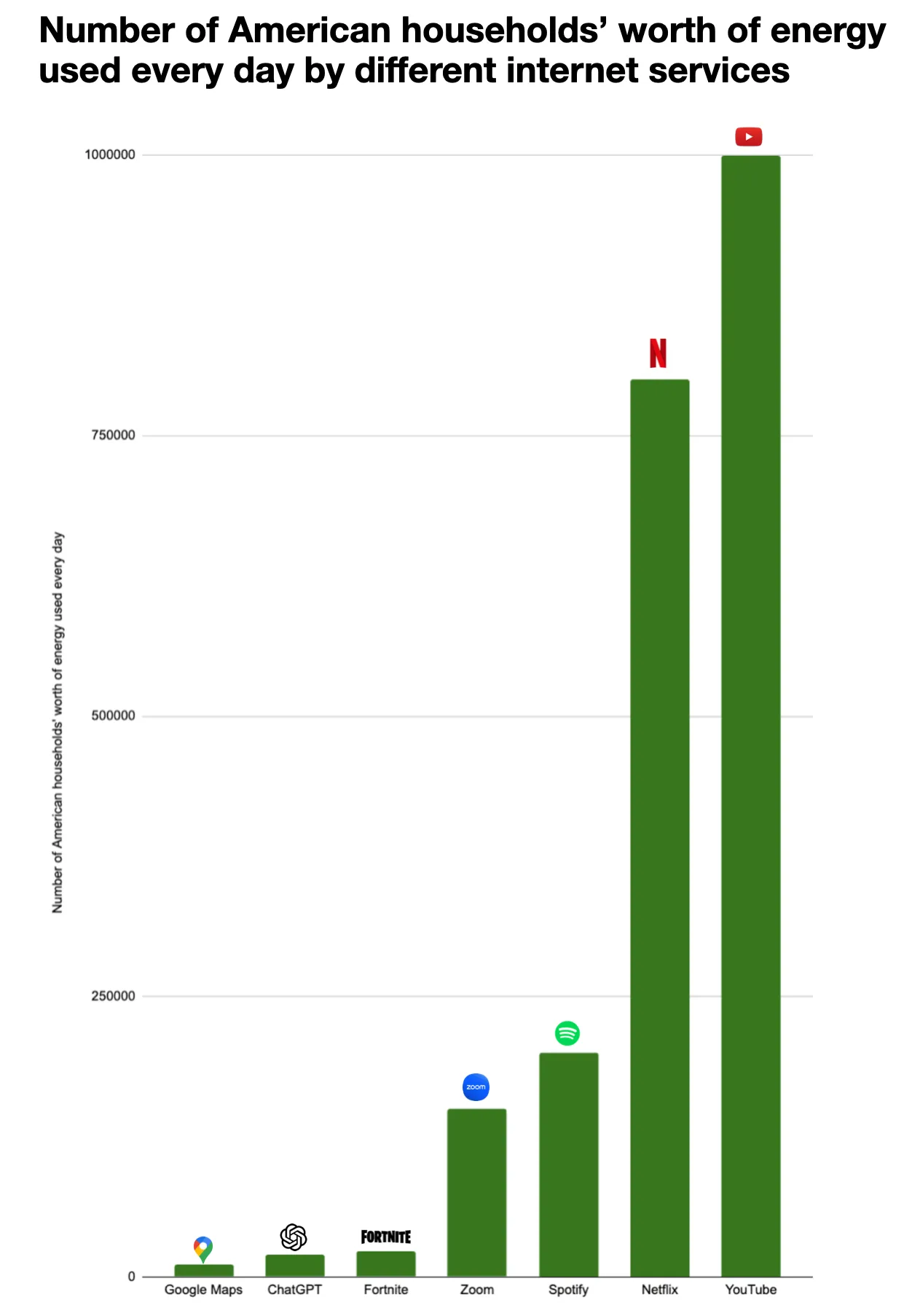

The exact energy costs are not published, but 3 Wh/request for ChatGPT-4 is the upper limit from what we know (and that’s in line with the approx. power consumption of my graphics card when running an LLM). Since Google uses it for every search, they have probably optimized for their use case, and some sources cite 0.3 Wh/request for chatbots; it depends on what model you use. The training is a one-time cost, and for ChatGPT-4 it raises the maximum cost/request to 4 Wh. That’s nothing. The combined worldwide energy usage of ChatGPT is equivalent to about 20k American households. This is for one of the most downloaded apps on iPhone and Android. Set against that massive usage, it’s clear that cutting back here is not effective for anyone interested in reducing climate impact, or you’d have to start scolding everyone who runs their microwave 10 seconds too long.

Even compared to other online activities that use data centers, ChatGPT’s power usage is small change. If you use ChatGPT instead of watching Netflix, you actually save energy!

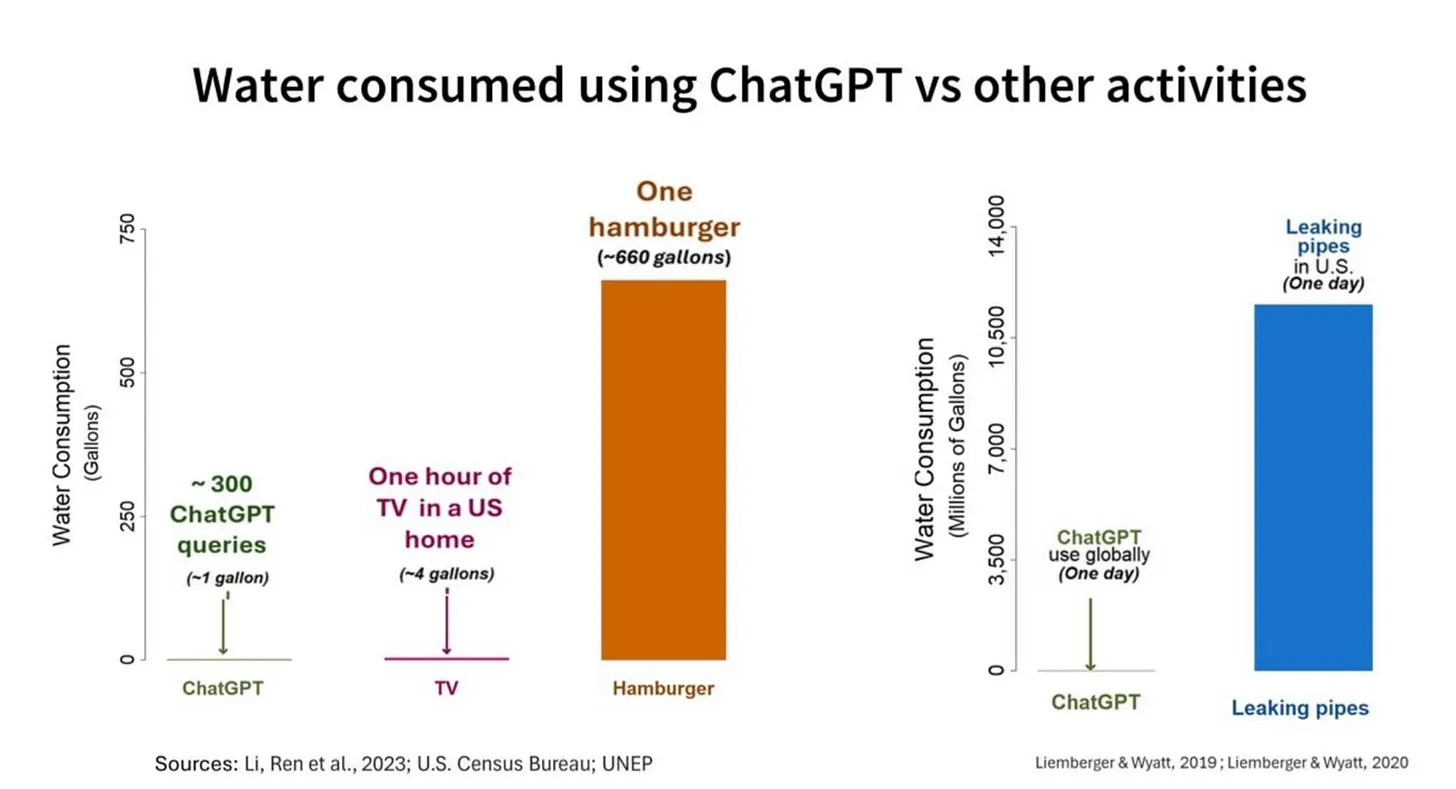

Water is about the same, although the positioning of data centers in the US sucks. The used water doesn’t disappear tho; it’s mostly returned to the rivers or evaporated. The water usage in the US is 58,000,000,000,000 gallons (220 trillion liters) of water per year. A ChatGPT request uses between 10 and 25 ml of water for cooling. A hamburger uses about 600 gallons of water. 2 trillion liters are lost due to aging infrastructure. If you want to reduce water usage, go vegan or fix water pipes.
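The gallons-to-liters conversion in the comment above checks out. A short sketch using the exact definition of the US gallon and the comment's own figures (58 trillion gallons per year, 10 to 25 ml per request, about 600 gallons per hamburger), which are taken as given here rather than verified:

```python
LITERS_PER_US_GALLON = 3.785411784  # exact, by definition of the US gallon


def gallons_to_liters(gallons: float) -> float:
    """Convert US gallons to liters."""
    return gallons * LITERS_PER_US_GALLON


# Yearly US water usage as quoted in the comment: 58 trillion gallons.
us_yearly_liters = gallons_to_liters(58e12)
print(f"{us_yearly_liters / 1e12:.0f} trillion liters")  # 220 trillion liters

# Requests needed to match one hamburger (~600 gallons) at the quoted 10-25 ml each.
burger_ml = gallons_to_liters(600) * 1000
for ml_per_request in (10, 25):
    print(f"{ml_per_request} ml/request: {burger_ml / ml_per_request:,.0f} requests")
```

At those rates, one hamburger's water footprint works out to roughly 91,000 to 227,000 requests.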

Read up here!

I have a hard time believing that article’s guesstimate since Google (who actually runs these data centers and doesn’t have to guess) just published a report stating that the median prompt uses about a quarter of a watt-hour, or the equivalent of running a microwave oven for one second. You’re absolutely right that flights use an unconscionable amount of energy. Perhaps your advocacy time would be much better spent fighting against that.
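The microwave comparison above is an energy-to-time conversion (1 Wh = 3,600 J). A minimal sketch; the 1,000 W microwave rating is an assumption, since real ovens vary quite a bit:

```python
JOULES_PER_WATT_HOUR = 3600


def seconds_of_runtime(energy_wh: float, appliance_watts: float) -> float:
    """Seconds an appliance drawing appliance_watts can run on energy_wh."""
    return energy_wh * JOULES_PER_WATT_HOUR / appliance_watts


# "About a quarter of a watt-hour" per prompt, on an assumed 1,000 W microwave.
print(seconds_of_runtime(0.25, 1000))  # 0.9
```

So "about one second of microwave" holds to within rounding for a typical oven.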

And Google would never lie about how much energy a prompt costs, right?

Especially not since they have a vested interest in having people use their AI products, right?

That’s not really Google’s style when it comes to data center whitepapers. They did, however, omit all information about training energy use.

Ahahah. Not their style to lie and betray people for profit? Get out!

… They’re kind of governed by law about what things they’re allowed to tell their stockholders.

And before you try to say otherwise, yes, laws that protect the ownership class are still being enforced.

Sam Altman, or whatever the fuck his name is, asked users to stop saying please and thank you to ChatGPT because it was costing the company millions. Please and thank you are among the least power-hungry messages ChatGPT gets, and they’re still costing it millions. Probably tens of millions of dollars, if the CEO made a public comment about it.

You’re right that training is hella power-hungry, but even using gen AI has heavy power costs.

I’m pretty sure it’s a product of scale, but also, GPT-5 is markedly worse. I’ve heard estimates of 40 watt-hours for a single medium-length response. Napkin math says my motorcycle can travel about a kilometer per single medium-length response from GPT-5. Now multiply that by how many people are using AI (anyone going online these days), then by how many prompts each user causes per day, then by 365, and you have how much power they’re using in a year.
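The multiply-it-out estimate above can be written as a small function. A hedged sketch: every input is a parameter, because none of the numbers are established here; the 40 Wh is the estimate the commenter heard, and the user and prompt counts are purely hypothetical:

```python
def annual_energy_gwh(
    wh_per_response: float,
    users: int,
    responses_per_user_per_day: float,
    days: int = 365,
) -> float:
    """Napkin estimate: Wh per response x users x responses/day x days, in GWh."""
    total_wh = wh_per_response * users * responses_per_user_per_day * days
    return total_wh / 1e9  # 1 GWh = 1e9 Wh


# Placeholder inputs, not real usage figures: 40 Wh per response,
# one million users, ten responses per user per day.
print(annual_energy_gwh(40, 1_000_000, 10))  # 146.0
```

Because it is a straight product, the result scales linearly with every input: swapping 40 Wh for the 0.3 Wh figure cited earlier in the thread cuts it by more than 100x, so the per-response number matters as much as the user count.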